Generative image inpainting with enhanced gated convolution and Transformers

Published in Displays, 2022, https://doi.org/10.1016/j.displa.2022.102321

Min Wang, Wanglong Lu, Jiankai Lyu, Kaijie Shi, Hanli Zhao.

Abstract:

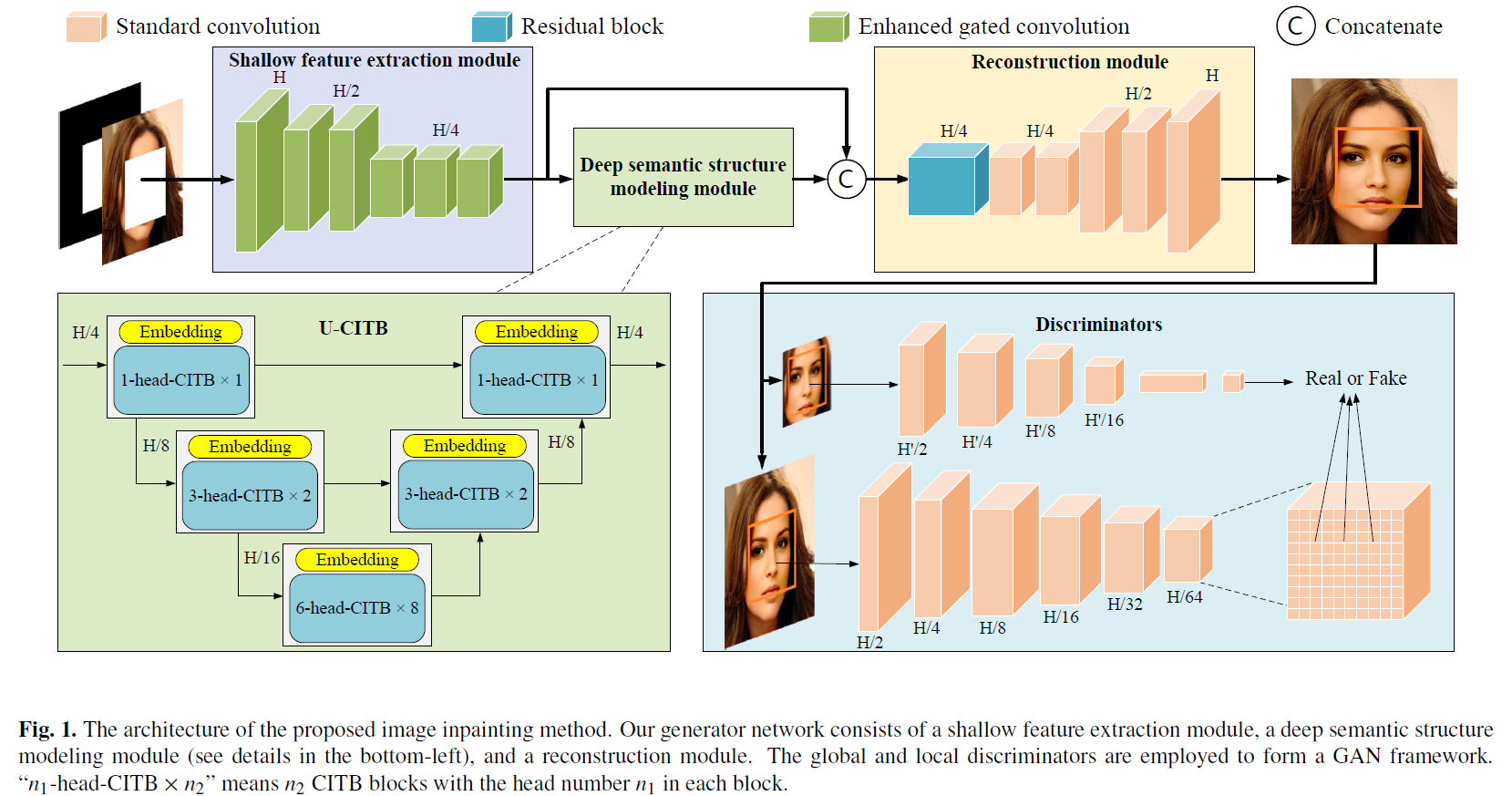

Image inpainting is widely used to fill damaged or masked areas of an image with realistic visual content. However, most existing inpainting methods struggle to reconstruct global structures when large areas are damaged. This paper proposes a novel high-quality generative image inpainting method that decomposes the generative network into three modules. First, an enhanced gated convolution is introduced to extract shallow features by making full use of the input mask and the gating mechanism. Second, a U-Net-like deep semantic structure modeling module is presented that leverages both the strong long-range modeling ability of Transformers and the ability of CNNs to learn rich texture patterns. Finally, a reconstruction module generates the inpainted result by combining the shallow textural features with the deep structural features. Extensive experiments on public datasets demonstrate that the proposed method produces high-quality visual effects and outperforms state-of-the-art methods on many quantitative metrics.
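The abstract does not spell out what the "enhanced" part of the gated convolution is, so the sketch below shows only the standard gated convolution it builds on (the formulation from Yu et al.'s 2019 free-form inpainting work): a feature branch passed through an activation, multiplied element-wise by a sigmoid gate computed from the same input, with the mask supplied as an extra input channel. This is not the authors' implementation. The pure-Python helpers (`conv2d_same`, `gated_conv`), the choice of ReLU as the feature activation, the mask convention (1 = hole), and the hand-picked kernel weights are all assumptions for illustration; in a real network the weights are learned.

```python
import math
from typing import List

Matrix = List[List[float]]


def conv2d_same(channels: List[Matrix], kernels: List[Matrix], bias: float = 0.0) -> Matrix:
    """One output channel: sum over input channels of a zero-padded 2D
    cross-correlation (odd square kernel), so the output keeps the input size."""
    h, w = len(channels[0]), len(channels[0][0])
    k = len(kernels[0])
    pad = k // 2
    out = [[bias] * w for _ in range(h)]
    for img, ker in zip(channels, kernels):
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < h and 0 <= x < w:  # zero padding outside the image
                            acc += img[y][x] * ker[di][dj]
                out[i][j] += acc
    return out


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def gated_conv(channels, feat_kernels, gate_kernels, feat_bias=0.0, gate_bias=0.0) -> Matrix:
    """Standard gated convolution: O = phi(W_f * I) * sigmoid(W_g * I), element-wise.
    phi is ReLU here (an assumption); the gate in (0, 1) acts as a soft,
    per-pixel mask deciding how much of each feature passes through."""
    feature = conv2d_same(channels, feat_kernels, feat_bias)
    gate = conv2d_same(channels, gate_kernels, gate_bias)
    return [
        [max(0.0, f) * sigmoid(g) for f, g in zip(f_row, g_row)]
        for f_row, g_row in zip(feature, gate)
    ]


# Toy usage: a 4x4 grayscale image with a 2x2 hole (assumed convention: 1 = hole).
image = [[0.2, 0.4, 0.6, 0.8] for _ in range(4)]
mask = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
masked = [[p * (1 - m) for p, m in zip(p_row, m_row)] for p_row, m_row in zip(image, mask)]

identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
zeros = [[0, 0, 0] for _ in range(3)]
neg_center = [[0, 0, 0], [0, -10, 0], [0, 0, 0]]

# The mask is concatenated as a second input channel. Hand-picked weights:
# the feature branch copies the masked image; the gate branch closes over holes.
out = gated_conv([masked, mask], [identity, zeros], [zeros, neg_center], gate_bias=5.0)
for row in out:
    print([round(v, 3) for v in row])
```

Because the gate is computed from both the image and the mask channel, the network can learn how strongly to trust each location, instead of applying a fixed rule for valid versus missing pixels.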

Recommended citation:

Min Wang, Wanglong Lu, Jiankai Lyu, Kaijie Shi, Hanli Zhao, Generative image inpainting with enhanced gated convolution and Transformers, Displays, 2022, 102321, ISSN 0141-9382, https://doi.org/10.1016/j.displa.2022.102321.