Probability-Based Channel Pruning for Depthwise Separable Convolutional Networks

Published in J. Comput. Sci. Technol. 37, 584–600 (2022)

Han-Li Zhao, Kai-Jie Shi, Xiaogang Jin, Ming-Liang Xu, Hui Huang, Wang-Long Lu, Ying Liu.

Abstract:

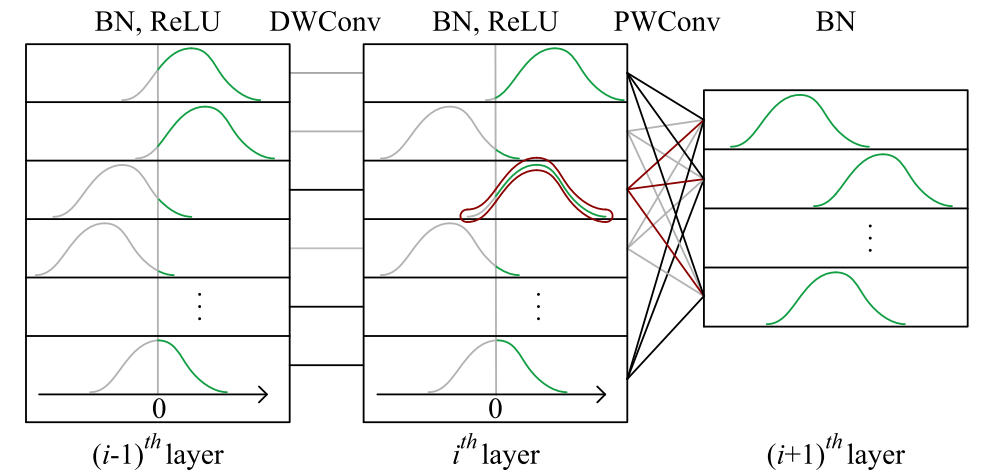

Channel pruning can reduce memory consumption and running time with minimal performance degradation, and it is one of the most important techniques in network compression. However, existing channel pruning methods mainly target standard convolutional networks, and they rely heavily on time-consuming fine-tuning to restore performance after pruning. To address this, we present a novel, efficient probability-based channel pruning method for depthwise separable convolutional networks. Our method uses a new, simple yet effective probability-based channel pruning criterion that takes the scaling and shifting factors of batch normalization layers into account. We further develop a novel shifting factor fusion technique that improves the performance of the pruned networks without requiring extra time-consuming fine-tuning. We apply the proposed method to five representative deep learning networks, namely MobileNetV1, MobileNetV2, ShuffleNetV1, ShuffleNetV2, and GhostNet, to demonstrate its efficiency. Extensive experimental results and comparisons on the publicly available CIFAR10, CIFAR100, and ImageNet datasets validate the feasibility of the proposed method.
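The abstract does not state the exact criterion or fusion rule, so the following is only a minimal sketch of the general idea under our own assumptions, not the authors' formulation. We assume the normalized batch normalization input is standard normal, so a channel's BN output is y = γz + β ~ N(β, γ²), and the chance that it survives the following ReLU is P(y > 0) = Φ(β/|γ|). Channels with a tiny probability are treated as dead and dropped; channels with a tiny |γ| output roughly the constant max(β, 0), which we fold into the bias of the next layer as a stand-in for "shifting factor fusion". The function names `activation_probability` and `prune_and_fuse` and both thresholds are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def activation_probability(gamma: float, beta: float) -> float:
    """P(gamma * z + beta > 0) for z ~ N(0, 1).

    Assumption (ours): the BN-normalized input z is standard normal, so the
    BN output y = gamma * z + beta is N(beta, gamma^2) and a following ReLU
    passes the channel only where y > 0.
    """
    if gamma == 0.0:
        # Constant output: always positive, or always clipped to zero.
        return 1.0 if beta > 0.0 else 0.0
    # For either sign of gamma, P(y > 0) = Phi(beta / |gamma|).
    t = beta / abs(gamma)
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


def prune_and_fuse(
    gammas: Sequence[float],
    betas: Sequence[float],
    next_weight: Sequence[Sequence[float]],  # following 1x1 conv, [out][in]
    next_bias: Sequence[float],
    prob_threshold: float = 0.01,  # hypothetical value
    gamma_threshold: float = 1e-3,  # hypothetical value
) -> Tuple[List[int], List[List[float]], List[float]]:
    """Hypothetical pruning step for one BN+ReLU layer feeding a 1x1 conv.

    Returns the kept channel indices, the pruned weight matrix, and the
    updated bias of the next layer.
    """
    new_bias = list(next_bias)
    kept: List[int] = []
    for c, (g, b) in enumerate(zip(gammas, betas)):
        if activation_probability(g, b) < prob_threshold:
            continue  # almost always zeroed by ReLU: contributes ~0 downstream
        if abs(g) < gamma_threshold:
            const = max(b, 0.0)  # ReLU of a (nearly) constant BN output
            for o in range(len(new_bias)):
                # Fold the constant input into the next layer's bias.
                new_bias[o] += next_weight[o][c] * const
            continue
        kept.append(c)
    # Drop the input columns of every removed channel.
    new_weight = [[row[c] for c in kept] for row in next_weight]
    return kept, new_weight, new_bias


if __name__ == "__main__":
    kept, w, b = prune_and_fuse(
        gammas=[1.0, 0.0, 0.5, 0.0005],
        betas=[0.2, 0.3, -5.0, 2.0],
        next_weight=[[1.0, 2.0, 3.0, 4.0], [0.5, -1.0, 0.0, 1.0]],
        next_bias=[0.0, 0.1],
    )
    print(kept, w, b)
```

In the usage example, channel 2 is dropped as dead, channels 1 and 3 are folded into the bias as constants, and only channel 0 keeps its weights. The bias folding is exact only when the next layer is a pointwise (1x1) convolution with a bias term; for a spatial convolution with zero padding, the border outputs would differ slightly.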

Recommended citation:

Zhao, HL., Shi, KJ., Jin, XG. et al. Probability-Based Channel Pruning for Depthwise Separable Convolutional Networks. J. Comput. Sci. Technol. 37, 584–600 (2022).